Sunday 29 January 2012

Tuesday 24 January 2012

American films beginning with "American"

A recent discussion of Shame led to one of those conversations that are not alien to me. I said that I hadn't seen the film, but had read that it was a kind of American Psycho for the teens ... or whatever we are calling this decade (consider other compelling definitions of Shame here and here). Now, sometimes these comparisons are superficially illuminating. So we might say that The Social Network is the Citizen Kane for Generation Y. Something like this is a stepping stone to getting to another point in the conversation - a slimy, moss-covered rock on which we might precariously balance as the deluge of half-formed opinions and almost-thoughts gushes past. Such comparisons are a way of saying "X is the new black". They are a conversational conceit, to be picked up and dropped as required. Sometimes, however, I don't know when to drop it. Years of having such conversations (starting in their Ur-form when I worked in a video shop) mean I find it all too easy to get swept away, and what is a blog good for if it isn't a black box to transform a drunken idea into a tendentious argument? You need some structure for this if it is to be useful, however, so the matrix of my model is the following:

Labels:

American Beauty,

American Gigolo,

American Graffiti,

American Psycho,

Angels in America,

art,

film

Monday 23 January 2012

Outsourcing the self

If social networking is creating a new ecology of communication, how is it doing so? It is effectively a way of outsourcing our connections with other people, whereby we no longer need to worry about doing anything so vulgar as actually having to remember birthdays, or ages, or where we met them, or...in certain cases, what their name is. The upside should be that by outsourcing our memory in this manner, we have more time free to do more things with these people. We don't need to write a letter, or even an email. Perhaps we think we can just send a message, and all is well. The problem is that this theoretical free time is a black hole. Most of us have had that experience of trawling Facebook for what we think is just a few minutes, then *snap* you're back in the room and two hours have passed. How is this? Well, as with all technologies, there are benefits and there are drawbacks. This form of communication is low-grade, and labour-intensive. We may get more connected, but this means more active effort is needed to maintain these connections.

Wednesday 18 January 2012

Perfect internet, algorithms and long tails

"There is no standard Google anymore", "invisible, algorithmic editing of the web", "The internet is showing us a world we want to see, not what we need to see." This is what we all glory in, that whatever we want is available to us, the long tail in action. As in the post below, however, the tail has to be attached to something. The long tail says that you can profit from deviating from a norm, but the result of the algorithms that choose for us is that new norms are created. Thus we have the phenomenon of your Facebook page becoming an echo chamber. Such algorithmic editing has swallowed wholesale the arguments behind Generation Me, as well as the result of the culture wars where all norms are negative. What we have, then, is software as a crypto-morality. "There cannot be such a thing as a norm", this narrative tells us, "because nobody has a right to tell me what's normal!" There is a confusion in our conceptual apparatus, because a quantitative norm is being interpreted as a qualitative norm. There is a case where there is a cross-over, as Hegel tells us, but basic exchange of information requires this standard in terms of brute numbers. There is not a political or ethical agenda to this concept of the numerical norm.

The assumption behind algorithmic editing is that there is a perfect search result. It is a cybernetic version of Plato's forms. There cannot be such a perfect internet experience, however, and we mislead ourselves in even imagining it is possible. Perfect, in the sense of the Latin perfectus, implies completion, of something being finished. This is nonsense, and so perhaps the ideology of perfection that underlies the thought and practice of information technologies should be reminded of the irreducible reality of noise, of that which does not necessarily communicate a message, but without which the message cannot be communicated. We need to be able to go for a stroll with no particular destination in mind. We need that element of play in the hard sense, not hippy-dippy "y'know like whatever man". StumbleUpon curiously tries to build play into an algorithm, but for me it lacks that spookily magic sense of achievement when you find something that hasn't been linked by a thousand others already. We must realise there is a fundamental and structural contradiction in attempting to make our online environment perfect for us in all ways, because this leads to the isolation of everyone on their own personal desert island. This simply establishes us in our own limits. Where then do we meet communally? Where do we have arguments? Where do we hear new stories, or jokes, or gossip? We need to have a norm from which we deviate, we need to have a same for there to be difference.

See also, Web 3.0 : http://en.wikipedia.org/wiki/Semantic_Web

Labels:

information,

limits,

long tail,

open source

Tuesday 17 January 2012

Is open source inevitable? II

[If open source is to have its day, some implications must be examined]

Technologies of privacy:

- Old style : passive, reactive, the default position.

- New versions?: Opt-in, active privacy; specifically designed ways of deciding what we share, and with whom.

Transition away from previous economic models. Manufacturing and the mass ownership of capital have been on the wane for generations. Consider the MIT model of spinning-off industries. According to the study "Entrepreneurial Impact: The Role of MIT", if one were to take the companies developed by MIT alumni collectively, they would form the 11th largest economy in the world. Technologies must be proven, thus they must be peer-reviewed as well as tested by the market. If everybody can use the same ideas (goodbye proprietary anything), if open source and the intellectual commons get their day, then the matter of 'economic viability' is set aside in favour of 'technical viability' and 'environmental viability'. The new models will have to incorporate recognition that there are diseconomies of scale, and that what we called economies of scale were all too often a fetishization of size. This is a realization from the realm of network theory, which brings the long tail to bear on our everyday lives. It is not merely a niche element - the long tail is not long tail, as it were. (E.F. Schumacher had an intimation of this in his collection of essays, Small is Beautiful.)

How do we then differentiate between old and new? It becomes a matter of service. Will digital mean that we are all eventually a part of the service economy? We may be able to set our businesses apart according to how we deal with our customers. It may be a matter of approach rather than cost. A fully open source world, with respect for the intellectual commons, is utopian. Too much seems to stand in its way, but elements of this can be used to consider alternatives to developing nations making the mistakes that the industrial and post-industrial nations have made. Consider the principle of the long tail applied to national economies. Of course there will be factors that lead a country to be wealthy by virtue of some natural resource, as long as unsustainable practices are maintained, but an open principle towards information will in theory allow innovation to take place anywhere. We see this in the emergence of 'regional hubs' and 'centres of excellence', but the best example yet, in terms of something that will actually last (unlike Dubai), is Singapore. CNN has fifty reasons to account for this (about 20 are compelling, but that's enough for me). People have for long enough considered the 'global' part of McLuhan's "Global Village". We need now to give greater attention to the 'village' part. That is the locus of differentiation, and of what we can manage, to make our actions environmentally and socially sound.

Labels:

commons,

information,

long tail,

networks,

open source

Towards a definition of technology

One popular view holds that the human mind truly came into its own via a "rewiring" (Kevin Kelly in Out of Control, online version here). With this there was an extension of the brain beyond its grossly physical, biological limitations. Technology serves body, body serves brain, with brain and body as a multiplicity serving the genes in the final reckoning. Technology is the overcoming of limits - of whatever kind. That which overcomes limits in whatever mode thus serves us as a technology. We should be able to extend such a provisional definition beyond hammers and wheels and machines. Accordingly, in the most serious sense, art is a technology. A poem, a painting, a dance is a recalibration of purely crude literalism (as a kind of tyranny of an ontology of representation, of reality as a 'given' rather than as a 'made'). Art can move us beyond these assumptions which we have forgotten were once new.

If we consider all things in terms of limits and our overcoming them, does this open the door to new analyses? Are there examples where it can be said not to apply? In technological terms, what is the function of this definition, and can it tell us anything new? It is basically an engineer's-eye-view, whereby a technology is a solution to a problem. Its function is to solve a problem, the problem being a limit which has been encountered. From this definition, some fascinating implications follow. Technology at this basic level of analysis appears to us as being self-perpetuating. Each technology brings with it a new set of problems, which in turn require new sets of solutions, and on, and on...literally ad infinitum. We can say then that the first characteristic of technology is that it is autocatalytic. In chemical terms, a reaction is autocatalytic if its product is also the catalyst for further reactions. This is so for technology and as such it manifestly is a source and product of feedback. Edward Tenner examines this in detail in terms of "revenge effects". I am not taking an ethical stance on the matter one way or the other, as Tenner's thesis requires of him. Nor am I suggesting this is simply a corollary of technology. I am saying that this feature is structurally integral to all technologies. (For an alternative overview with an ethical focus, see "The Unanticipated Consequences of Technology" by Tim Healy.)

Problem: solved.

Let us return to the human mind and consider the following. The rewiring that brought about consciousness brought about the ability to think in terms of what is not present, in time and space. It allowed for planning for a harsh winter. It allowed one to consider the potential benefits and dangers of the next valley over. It escapes what I above called crude literalism. There are lines in Robert Browning's "Andrea del Sarto" that capture this:

"Ah, but a man's reach should exceed his grasp,

Or what's a heaven for?..."

The human being is that creature par excellence whose reach exceeds its grasp, except it is the cognitive reach that exceeds the physical grasp. Technology is the attempt to bridge the two. We will never, in our present form, have the steady-state evolutionary form that living fossils such as the coelacanth have; in them, reach and grasp coincide perfectly. Homo sapiens, in contrast, are necessarily an imperfect solution to the problem of life (if this can be said without woe-is-us connotations), the problem of adaptation to an environment. The reason for this is that by being sapiens we continually alter our environment such that no steady state becomes possible. We are implicated. Each new product of our mind that enters the world as either a physical or a mental technology is a solution that creates new problems. The cycle cannot stop.

Labels:

art,

Edward Tenner,

Kevin Kelly,

limits,

literature,

technology

Saturday 14 January 2012

Fantasy and the possible

"Yet the invocation of magic by modern fantasy cannot recapture this fascination, but is condemned by its form to retrace the history of magic's decay and fall, its disappearance from the disenchanted world of prose, the 'entzauberte Welt', of capitalism and modern times."

- Fredric Jameson, Archaeologies of the Future: The desire called utopia and other science fictions [71]

The major works of serious literary fantasy reflect upon this concept of magic-as-waning (John Crowley, Sheri Tepper, Susanna Clarke, et al.). How can this magic be linked properly to its reserve of power, namely human creative power? This creative power has become alienated, and the dialectic of enlightenment applies here just as much to religion because of fantasy's secular-thus-literary realm of exploration. Well, it ought to explore, but more often the literary fantasy (or just plain fantasy, the literary mulls things over more profoundly than this) will at least reflect on its own alienation.

John Crowley is a prime example of this in both his masterpiece Little, Big and the Ægypt cycle. Susanna Clarke upsets our expectations by positing a final waxing of magic rather than its disappearance from the world, which emphasises all the more how the magical truly has waned from our world and even our imaginations. It is striking that Clarke's Jonathan Strange and Mr. Norrell was so acclaimed, but what is even more striking is that its reception seemed always to be accompanied by a note of surprise that this, a book that involved magic, was not hopelessly puerile. We could, this raised-eyebrow subtext suggested, actually enjoy something that wasn't on the EastEnders end of the realism continuum, yet that was not of the dwarves and unicorns variety. It was becoming acceptable to note that there was indeed a literary fantasy that could be read by those coming from the literary vector rather than the other way round, coming from fantasy but being discerning. Previously it was Borges and Calvino who were in this non-purely-realism citadel (acceptable, as Gore Vidal notes regarding another topic, because they don't write in English, which we might call the language of instrumental reason), but others have joined them. One would hope, however, that as fantasy became literary, non-discussions as to the artistic merit of previous bastions should really become moot (such that we can say J.R.R. Tolkien was not "snubbed", he simply didn't deserve the Nobel).

If we can reflect on the passing of magic in a fantasy text, is this more than a shallow generic narcissism? Does it point to an intriguing approach whereby the old Freudian idea allows magic to be regarded as wish-fulfillment, rather than having little to do with the "thinking through of the dialectic" that Fredric Jameson is proposing? The alienation of magic has much to do with the alienating power of technology and reason, and with how these forces of industry and enlightenment are regarded as inhuman, rather than as the ne plus ultra of humanity. We might make a connection here with Arthur C. Clarke's "Three Laws":

- When a distinguished but elderly scientist states that something is possible, he is almost certainly right. When he states that something is impossible, he is very probably wrong.

- The only way of discovering the limits of the possible is to venture a little way past them into the impossible.

- Any sufficiently advanced technology is indistinguishable from magic.

Labels:

fantasy,

Fredric Jameson,

literature,

science fiction,

technology

Thursday 12 January 2012

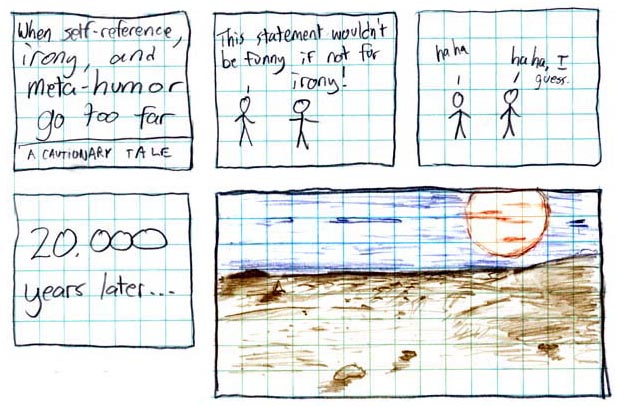

Inevitability of irony

There is a structural inevitability to all discourse, such that as it progresses, it absorbs learning into itself, creating more, growing, until ultimately it begins to query its conditions of possibility. This is irony, and it does not necessarily arise purely out of some sort of cognitive paralysis, the grounds on which irony is all too often dismissed. It is not some disease of modern thought. It is part and parcel of all discourse. It is in fact a permanent feature of all expert systems (Fernand Hallyn, The Poetic Structure of the World: Copernicus and Kepler, pp. 20-2), of literature, and indeed of the vast complex of a culture's discourse in toto. Irony is an effort to reconsider the structural presuppositions that a discourse relies upon, most likely because of a perceived need for improvement and an awareness that the discourse one is using is coming up to the limits of its usefulness. It is the intuition that a discourse could operate better, could be more precise and efficient.

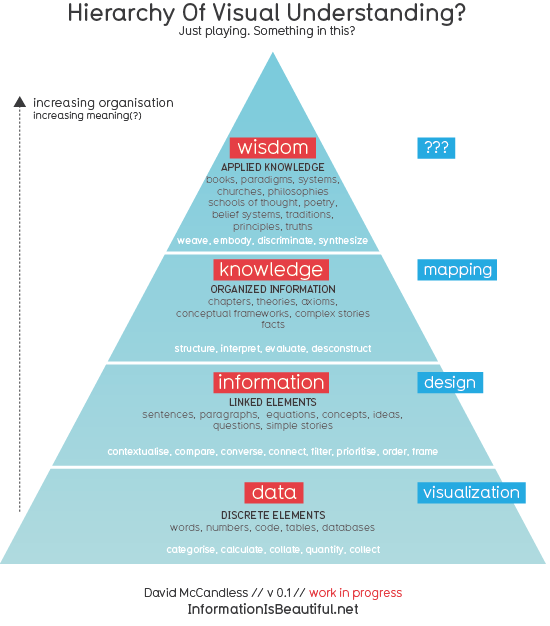

Often irony proceeds as a knowing and saying too much, as a show that one has mastered the vast amount of material available in a given field (one cannot be ironic without this overflowing of knowledge), to the extent that jokes, puns and other diversions not only become possible but may seem necessary to maintain interest in a given topic. With this, interest ceases to be "constructive", and rather becomes a performance, an aesthetic. One is 'knowing' rather than knowledgeable. If we consider the pyramid of David McCandless below, it seems that in irony we slide back down from knowledge into information. We no longer have coordinating ideas to pull information together into a coherent structure. Irony is a kind of decoherence rather than incoherence. If we use the analogy of the stock market, then irony is a symptom of massive overvaluation, and any backlash against it such as "The New Sincerity" (see here for the neutered and here for the enthusiastic versions) is a crash, and an inevitable one (or necessary, depending on your view). What emerges after this is that which has run a gauntlet of functionality, as it has demonstrated its worth via its robustness in the onslaught of critique.

Wednesday 11 January 2012

The Whig interpretation of information: Is open source inevitable? I

The following is an attempt to trace some of the outlines of an element of the ideology of technology; it is not a detailed sociological analysis. The standard political model favoured by many interested in information technologies points to the supposed inevitability of openness. It is a techno-libertarianism that hovers over all discussion, our standard right-on refrain. Certainly it can be regarded as a goal, but more along the lines of a regulating ideal, rather than a Five Year Plan. This is a polite way of saying we don't back any of this up with action. The argument for openness is in its essence a historical one, which notes that whenever there has been an information technology that somebody attempted to control, there followed a breakdown of this control, with a free and open exchange following. I draw a parallel with Herbert Butterfield's thesis of the Whig interpretation of history, which recasts past history with a view to the present. Accordingly, an apparent chain of progress can be easily discerned from the chaos of the past, if you know what you are looking for. Such is the confirmation bias. This, however, misses the structures through which information is made available, those other technologies that have an influence - and there are many of them. In one set of terms this is how information and indeed an entire information technology is encoded. We need to confront the problem of context.

Reading the above outline (and I also think of Stephenson's Cryptonomicon mentioned previously) we must see that not all information is (or should be?) available as the ideal might hold. Indeed, openness is not a default. Were it so, would codes from history spontaneously decay into sense via some entropy of coherence? Openness is not a default because encoding is a technology. So too must we consider its recoding into openness (not decoding, which is then a contradiction in these terms). The myth of openness is based in reality, as all myths are, but it forgets TANSTAAFL: there ain't no such thing as a free lunch. This is a governing principle of information theory as much as it is of thermodynamics.

According to the model I am proposing to dismiss, communication works as follows: we speak openly to communicate a message, then write this down so it is intelligible to both sides, and then it is encrypted. What this picture (or straw man, if I am totally off the wall) does not allow is that which a thoroughgoing techno-functionalist position can account for, namely the following. Speech is a form of encoding, long accepted and widely available, but a technology nonetheless. So too is writing. So too is transforming that writing into a second level of sense, namely an encoded (recoded according to my view) piece of writing, though of course it need not be writing. Codes do not degrade, they are broken. We invent a technology to extract sense from the encoded text, and thus we make it appear as though we have reversed the arrow of time, stepping into a past where the message we seek had not yet been put into that secret code which cloaks it. It almost appears as though we got that free lunch. We did not. Encryption is but another form of encoding, and it is as much a technology as is any other encoding. Openness too is a technology.

Lego: a threat to open source.

Open source as an idea as well as a movement needs to realise that, because it is inextricably linked with trade and corporate secrecy (another chapter of Bok's), it must become part of an ecology of openness if it is to be more than a flash in the pan, interacting with problems such as copyright and the knowledge economy. Indeed, regarding the former, there has been for some time an awareness of this, found in the idea of copyleft, which enacts a philosophy of pragmatic idealism, as outlined by Richard Stallman. This is not the time for micropolitics, but for a statement of intent, a declaration whereby we might make our unthought and unspoken assumptions real. One more effort, technologists, if you would become open source!

Labels:

commons,

information,

open source,

philosophy

Sunday 8 January 2012

Ideas and criticism

If an idea is presented, and elements of it are questioned in a manner that parries but does not thrust, if it is purely surface, does this in fact have any merit as criticism? A criticism ideally has a purpose, which is basically to be a form of troubleshooting. It must be specific, it must engage with the topic. To not do this is equivalent to saying "well I haven't used the software you developed, but it's probably rubbish" (we might call this The Troll's Refrain). Stand back and ask how this helps us to actually improve the idea. Even thinking 'us' is useful, because if there's a conversation, then there is a joint effort. David McCandless (author of the excellent Information is Beautiful) has a useful breakdown of how we think about the world, in the following pyramid:

In this, there is a progressive hierarchy of whittling out what is irrelevant, the old effort of separating the wheat from the chaff. This means that the bottom is a field somewhere, and so the top is presumably a Weetabix. Ideas are about turning information into knowledge; they move us from one level to the next. The non-criticism I am thinking of above seeks to move us back down, from knowledge back to information, or data. Instead of information, it focuses on noise.

There is a temptation in all debate to ask for greater and greater precision, but at the same time you have to step back and ask what level of perfection you want. If ideas are to be abstract, then they of course cannot account for everything. What we are searching for is a degree of functional 'robustness'. This is a term which Kevin Kelly makes continuous reference to in his writing (I am mainly thinking about 1995's Out of Control, which has dated far less than I would have expected), denoting that capacity for enduring networks of communication to cope with unpredictability. It is a nice antidote to management horseshit about "best practice" because it factors into its very structure that we start from least worst, and go on from there. We trade off a small degree of efficiency for a considerably greater degree of structural toughness. There will ever be noise, but not every blip is a threat to the entire system. Ideas are supposed to be solutions, and they are not to be rejected on aesthetic grounds. If it works it works, and from there we can begin the work of making our solution elegant.

Labels:

argument,

information,

Kevin Kelly,

networks

Saturday 7 January 2012

It's Kant...but IN SPACE!: or, MC Hammer versus Missy Elliott.

"We here propose to do just what Copernicus did in attempting to explain the celestial movements. When he found that he could make no progress by assuming that all the heavenly bodies revolved round the spectator, he reversed the process, and tried the experiment of assuming that the spectator revolved, while the stars remained at rest. We may make the same experiment with regard to the intuition of objects."

- Immanuel Kant, Critique of Pure Reason, Preface to the Second Edition [B xv], Meiklejohn trans.

In any discussion of either Kant or the philosophy of the Enlightenment, this idea is one of the first we encounter. It is either noted simply as his "Copernican revolution", or it is glossed in terms of scientistic hubris and a dangerous precedent for thought. I had regarded it more as the latter the first time I encountered this text, but upon reading it now, it has a decidedly different tone, one which causes me to silence the part of me that recognises and acknowledges metaphor when I encounter it. Instead, I think I am only just now getting what Kant is actually saying here. This language is considerably less figurative than I had at first thought (I may simply be slow, I make no claims to novelty here). It is not simply a rhetorical flourish; the extended metaphor of the successive governments of the queen of the sciences in the first preface illustrates that, at any rate, if Kant goes to the effort of devising a metaphor, he'll get his thaler's worth. Admittedly, he is not regarded as a master stylist, and if ever the joke "I am sorry for this being so long, but I hadn't the time to make it any shorter" applied to a philosopher, well... Kant was it.

Fail.

He is not attempting to point out that he is on the same level of awesomeness as Copernicus, nor is he attempting to arrogate some scientific kudos for critical philosophy. He is doing no more than noting that he is effecting a similar change of perspective. I assume that if one knows the name Copernicus, one understands that he was responsible for kicking off the modern shift in science away from an Aristotelian/Ptolemaic or geocentric conception of the universe (where the Earth stands at the centre, and everything else wheels off elsewhere with its epicycles and epicycles of epicycles &c.) to the modern, heliocentric model. With Copernicus and those who followed, we were no longer it. We were no longer the centre of the universe, except in our own feverish egotistical imaginings.

Kantian.

Another of Kant's famous sentences, and one that he arranged should be engraved on his gravestone, is the following: "Two things fill the mind with ever-increasing wonder and awe, the more often and the more intensely the mind of thought is drawn to them: the starry heavens above me and the moral law within me." The former is what concerns me more, even though the moral element is what gets more press (as much as transcendental critique gets attention these days). Nature and science were hugely important to Kant, and he was no mere dilettante. Accordingly, he had a proper understanding of what Copernicus had done. Where before astronomy had put humanity at the centre, the new heliocentric model did away with this, and in the process exposed our limitations. We did not know everything. Aristotle was not correct in all things (this was a serious epistemological threat to the church, thus the Inquisition requesting the pleasure of Galileo's company).

Basically Kant does the same thing for philosophy as Copernicus did for astronomy: he put our conceptual model down on the page, flipped it, and reversed it. He pointed out that our assumptions about how we engage with reality are what mislead us, and so doing what philosophy and metaphysics had been doing for so long (namely "when you encounter a problem, make a distinction") is the wrong approach. As Abraham Maslow said, if you only have a hammer, you tend to see every problem as a nail. One way of putting it might be that Kant wanted us to reconsider not just our conceptual toolbox, but also the problem/solution dichotomy. By placing our knowledge and the attempt to critically think through our means of knowing in the context of the universe as a whole with us as but an element, the possibility of an entirely new perspective and way of thinking presents itself.

Wednesday 4 January 2012

Too legit to quit

The obsession with legitimacy is the original recursive argument of political and philosophical debates. It sets off from an ideology of origination that skews all subsequent discussion and investigation. Thus in many ways the appeal to something's being self-evident is a stunningly original departure (much like the experience of reading Descartes and coming across the cogito ergo sum for the first time). It denies the dominion of the past, saying that our ever-present (N.B.) capacity for thought and invention is necessary, if not always sufficient.

The difficulty with removing legitimacy is that a thing must be allowed to grow and change, lest what it is now become but another foothold for those attempting to climb back to the days of yore in the assumption that older=better. (This is why the argument of a type of infallible, original intent interpretation of the U.S. constitution is the most perverse of all, attempting to be both historical and yet valid for all time.) If we consider a thing to be growing and alive, then this must imply that it will eventually pass out of being. Legitimacy should be considered according to what we want now and in the future, considering whether this tallies with the past. If it doesn't, why not? If not, do we do away with it?

Sunday 1 January 2012

In your own time

Take the point made below about timescales, but reverse it. This is the more accurate representation of how things stand in terms of influence. Our own particular, partisan approaches require that things be presented in the manner I first suggested, but the more exhaustive reading indicates that the reverse is the case. It seems a minor point, but it is crucial to the presentation of the argument. Here's why.

In this change of timescales throughout history, the fragmentation of time has taken place just as the individual has risen in importance. Fragmentation here isn't necessarily a bad thing. We can simply observe that from the introduction of clocks in villages and towns, allowing for a more precise breakdown beyond the pace of the ringing of church bells for services, up to the industrial age and the ascent of the pocketwatch (and all the social effects of this) ever smaller divisions of time have become possible. As a result, the grand timescales of dynasties and institutions are now seen as an aggregate of phases, of years and decades and centuries. As such, the long view is no longer regarded as an organic or collective entity unto itself.

Consider this with regard to the phenomenon of European aristocratic dynasties. The Habsburg family (with all its various cadet branches included) claims to trace itself back to the 10th century, and from here they married their way to a continental supremacy that convulsed Europe in war and ruin over the centuries. It would not be fruitful to consider their history in terms of individuals, as our sense of time is different to theirs.

Their coat of arms is a testament to the various alliances, co-options, annexations, marriages, and outright thefts that consolidate such a dynasty as a supra-individual entity. In these terms, we must consider the context of time and timescales. By leaving out the collective entity of time, we miss something. In his "Idea for a Universal History from a Cosmopolitan Point of View", Kant points out in his second thesis that "those natural capacities which are directed to the use of [...] reason are to be fully developed only in the race, not in the individual." Ignoring the use of the word 'race' here with its chauvinistic implications, we see that there is an awareness of the different implications of different timescales. One is not superior to another, but has rather a different function.
